Explore the capabilities of DALL·E 2, an advanced text-to-image model developed by OpenAI. Discover the groundbreaking technology behind DALL·E 2 and its impact on the field of image generation.

Introduction

DALL·E, DALL·E 2, and DALL·E 3 are cutting-edge text-to-image models developed by OpenAI. These models utilize deep learning techniques to generate digital images from natural language prompts. In this article, we will explore the capabilities of DALL·E 2, its underlying technology, and its impact on the field of image generation.

History and Background

DALL·E was first introduced by OpenAI in January 2021 as a modified version of the GPT-3 model that generates images from textual descriptions. OpenAI followed it with DALL·E 2 in April 2022, a successor designed to generate more realistic, higher-resolution images that can combine concepts, attributes, and styles. After a beta phase, DALL·E 2 was made available to the public in September 2022. OpenAI continued to refine its image generation models and unveiled DALL·E 3 in September 2023, claiming an improved understanding of nuance and detail compared to its predecessors.

Technology

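The original DALL·E is a multimodal implementation of GPT-3, a model based on the Transformer architecture, with 12 billion parameters. It processes a text prompt and an image as one sequence of tokens (caption tokens followed by image tokens) and is trained on a large dataset of text–image pairs collected from the internet. DALL·E 2 takes a different approach: it uses 3.5 billion parameters and, instead of an autoregressive Transformer, generates images with a diffusion model conditioned on CLIP image embeddings.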

One of the key components of the DALL·E system is CLIP (Contrastive Language-Image Pre-training), a model that learns the relationship between textual semantics and visual representations. CLIP is trained on hundreds of millions of image–caption pairs. The original DALL·E used it to rank candidate images by how well they match the caption, and DALL·E 2 builds its generation process directly on CLIP's embeddings, which capture how textual concepts manifest in the visual space.
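CLIP can be used to rank generated images against a caption, which is how the original DALL·E used it. A minimal sketch of that ranking step, assuming the text and images have already been embedded in a shared space, scores each candidate by cosine similarity. The three-dimensional vectors are hypothetical placeholders for real CLIP embeddings (which come from trained encoders), and `normalize`, `cosine_similarity`, and `rank_images` are illustrative names, not part of any CLIP library.

```python
import math


def normalize(v):
    """Scale a vector to unit length (L2 norm)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine_similarity(a, b):
    """Dot product of the two vectors after unit-normalizing them."""
    a, b = normalize(a), normalize(b)
    return sum(x * y for x, y in zip(a, b))


def rank_images(text_embedding, image_embeddings):
    """Return image indices sorted from best to worst match with the caption."""
    scores = [cosine_similarity(text_embedding, img) for img in image_embeddings]
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)


# Hypothetical toy embeddings standing in for real CLIP outputs.
caption = [0.9, 0.1, 0.0]
candidates = [
    [0.0, 1.0, 0.0],  # unrelated image
    [0.8, 0.2, 0.1],  # close match
    [0.5, 0.5, 0.0],  # partial match
]
print(rank_images(caption, candidates))  # → [1, 2, 0]
```

Because CLIP is trained contrastively, matching captions and images end up close together in the embedding space, so a simple similarity score is enough to reorder candidates.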

DALL·E 2's Image Generation Process

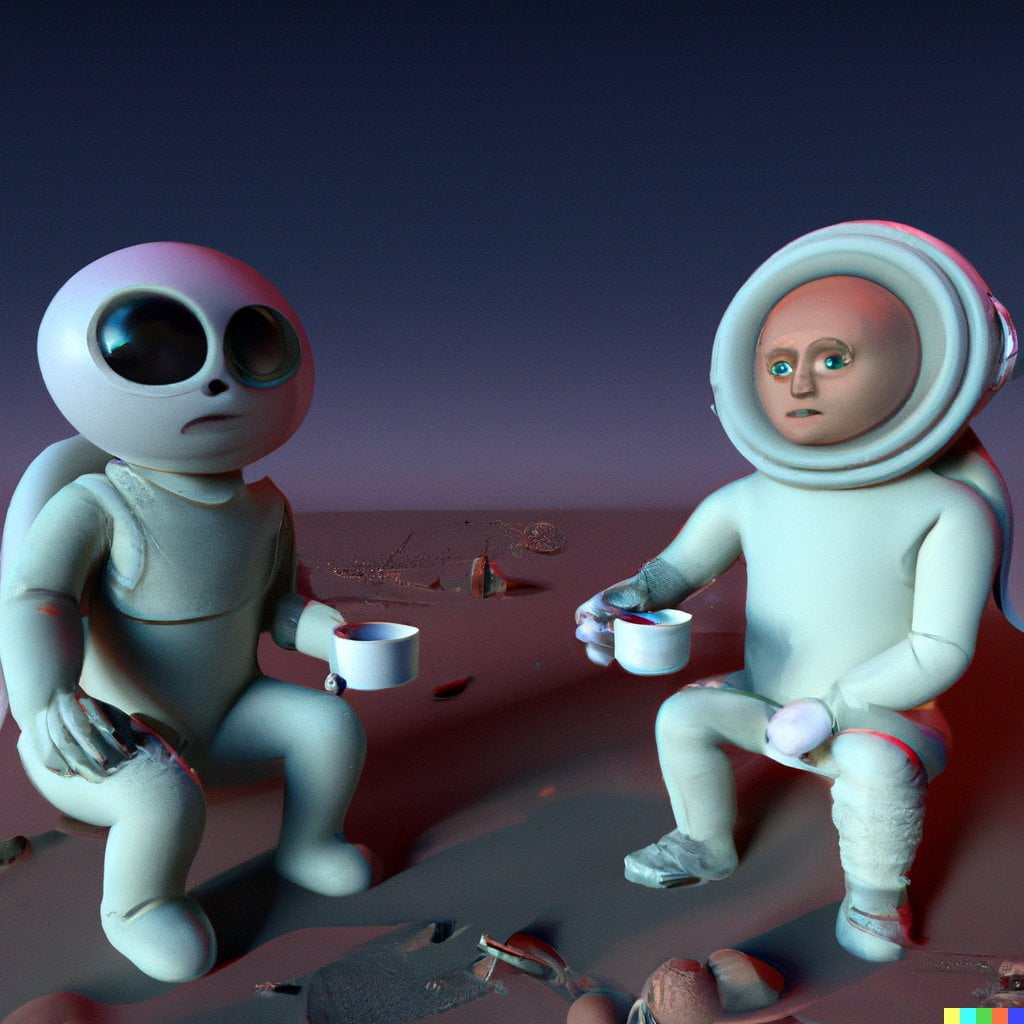

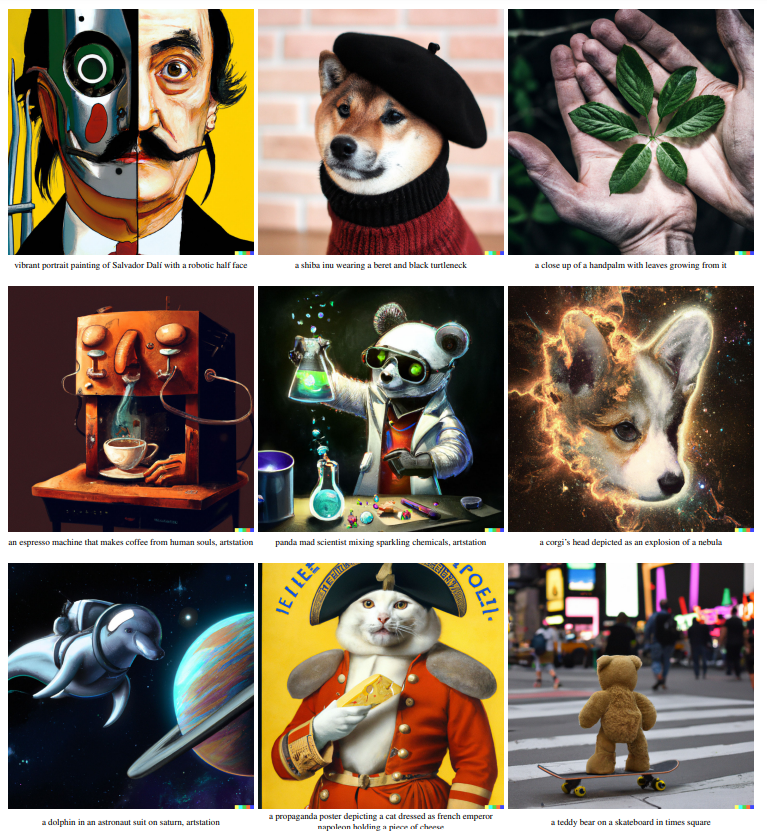

DALL·E 2 generates an image from a text prompt in stages. The prompt is first encoded into a CLIP text embedding; a prior model then maps this to a CLIP image embedding, and a diffusion decoder generates an image from that embedding. For example, a prompt like "a teddy bear riding a skateboard in Times Square" produces an image of a teddy bear skateboarding in Times Square.

To achieve this, DALL·E 2 relies on its multimodal design, which combines textual and visual abstractions. Through training on large-scale datasets, the model learns to link related textual and visual concepts. This allows DALL·E 2 to generate semantically plausible images that usually reflect the given prompts.
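DALL·E 2's generation pipeline can be illustrated with a toy version. The DALL·E 2 research paper ("Hierarchical Text-Conditional Image Generation with CLIP Latents") describes a prior that turns a CLIP text embedding into a CLIP image embedding, a diffusion decoder that produces a 64×64 image from it, and two upsampling models that bring it to 256×256 and then 1024×1024. In the sketch below every component is a hypothetical stand-in (`encode_text`, `prior`, `decode`, and `upsample` are illustrative names): the "decoder" merely nudges random noise toward embedding-derived values and the upsamplers just repeat pixels, so only the data flow and the image sizes are meant to be representative, not the learned models.

```python
import hashlib
import random

EMBED_DIM = 8  # toy size; real CLIP embeddings are far larger


def encode_text(prompt):
    """Stand-in for the CLIP text encoder: a deterministic pseudo-embedding."""
    seed = int(hashlib.sha256(prompt.encode("utf-8")).hexdigest(), 16)
    rng = random.Random(seed)
    return [rng.uniform(-1.0, 1.0) for _ in range(EMBED_DIM)]


def prior(text_embedding):
    """Stand-in for the learned prior (text embedding -> image embedding).
    Here it is only a fixed scaling, purely for illustration."""
    return [0.5 * x for x in text_embedding]


def decode(image_embedding, size=64, steps=10, seed=0):
    """Caricature of the diffusion decoder: start from random noise and
    repeatedly move each pixel toward a target derived from the embedding."""
    rng = random.Random(seed)
    image = [[rng.gauss(0.0, 1.0) for _ in range(size)] for _ in range(size)]
    for _ in range(steps):
        for r in range(size):
            for c in range(size):
                target = image_embedding[(r + c) % len(image_embedding)]
                image[r][c] += 0.5 * (target - image[r][c])
    return image


def upsample(image, factor):
    """Nearest-neighbour stand-in for the learned upsampling models."""
    return [
        [row[c // factor] for c in range(len(row) * factor)]
        for row in image
        for _ in range(factor)
    ]


def generate(prompt):
    text_emb = encode_text(prompt)
    image_emb = prior(text_emb)
    low_res = decode(image_emb)      # 64 x 64
    mid_res = upsample(low_res, 4)   # 256 x 256
    return upsample(mid_res, 4)      # 1024 x 1024


image = generate("a teddy bear riding a skateboard in Times Square")
print(len(image), len(image[0]))
```

Splitting the work this way means the prior only has to solve a text-to-embedding problem, while the decoder and upsamplers focus on turning an embedding into pixels.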

Image Manipulation and Interpolation

Besides generating new images, DALL·E 2 can also manipulate and interpolate images. It can modify existing images while preserving their salient features, which lets users create variations that alter specific attributes or styles. It can also interpolate between two input images, blending their characteristics into a sequence of intermediate results.
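In the DALL·E 2 research paper, interpolation happens in CLIP's embedding space: the image embeddings of the two inputs are blended with spherical linear interpolation (slerp), and each intermediate embedding is then decoded into an image. A minimal sketch of the slerp step follows, assuming unit-length input vectors; the two-dimensional vectors are hypothetical stand-ins for real CLIP image embeddings, and the decoding step is omitted.

```python
import math


def slerp(a, b, t):
    """Spherical linear interpolation between unit vectors a and b, with t in [0, 1]."""
    dot = sum(x * y for x, y in zip(a, b))
    dot = max(-1.0, min(1.0, dot))  # guard against rounding outside acos's domain
    theta = math.acos(dot)          # angle between the two vectors
    if theta < 1e-6:                # nearly identical vectors: linear blend is fine
        return [(1 - t) * x + t * y for x, y in zip(a, b)]
    s = math.sin(theta)
    wa = math.sin((1 - t) * theta) / s
    wb = math.sin(t * theta) / s
    return [wa * x + wb * y for x, y in zip(a, b)]


# Hypothetical unit-length "image embeddings".
emb_a = [1.0, 0.0]
emb_b = [0.0, 1.0]
for t in (0.0, 0.5, 1.0):
    print(t, [round(v, 3) for v in slerp(emb_a, emb_b, t)])
```

Slerp is preferred over plain averaging here because it keeps intermediate vectors on the unit sphere, where normalized CLIP embeddings live; a straight linear blend would shrink their length toward the midpoint.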

Ethical Considerations

While DALL·E 2 showcases remarkable advancements in image generation, concerns have been raised regarding potential ethical implications. Algorithmic bias can be observed in the generated images, such as gender representation disparities. OpenAI acknowledges these limitations and continues to work towards addressing biases and improving the fairness of their models.

Conclusion

DALL·E 2 represents a significant milestone in the field of image generation. Its ability to generate high-quality images based on textual prompts, manipulate existing images, and interpolate between them opens up new possibilities in creative applications. As OpenAI continues to refine and expand upon their image generation models, the boundaries of what is achievable in the realm of AI-generated imagery are being pushed further.

What Does the Wiki Tell Us?

DALL·E 2, a successor to the original DALL·E model, was announced by OpenAI on April 6, 2022. It was designed to generate more realistic images at higher resolutions and could combine concepts, attributes, and styles. It entered a beta phase on July 20, 2022, with invitations sent to 1 million waitlisted individuals. During this phase, users could generate a certain number of images for free each month and could purchase more. In September 2022, OpenAI made DALL·E 2 available to everyone and removed the waitlist requirement. OpenAI continued to iterate on its image generation models and, in September 2023, announced DALL·E 3, which it described as able to understand significantly more nuance and detail than its predecessors.

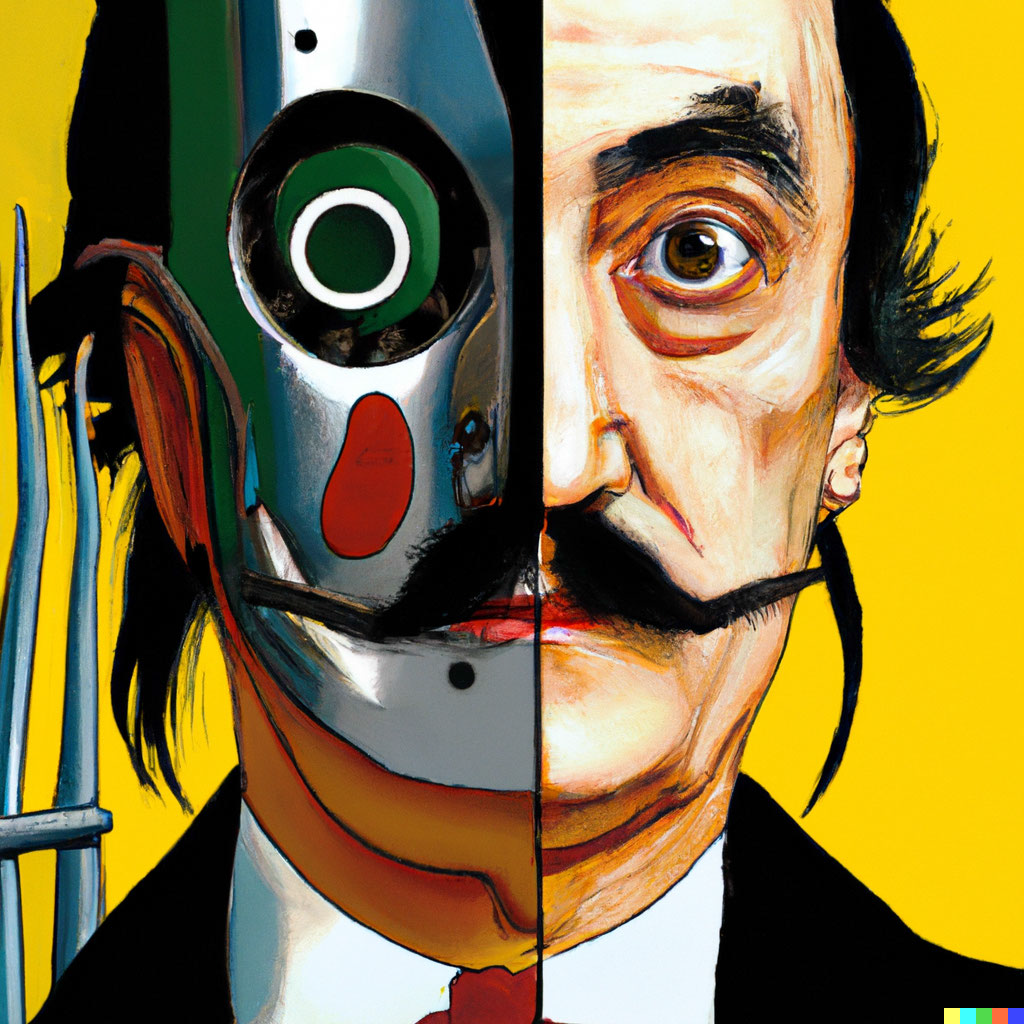

The original DALL·E model is a multimodal implementation of GPT-3 with 12 billion parameters. It "swaps text for pixels" and is trained on text–image pairs from the internet. The input to its Transformer is a single sequence: a tokenized image caption followed by tokenized image patches. The model can generate images in various styles, including photorealistic imagery, paintings, and emoji. It can also manipulate and rearrange objects in its generated images and infer appropriate details that the prompt does not specify, such as adding Christmas imagery to prompts commonly associated with the holiday. DALL·E shows a broad understanding of visual and design trends and can generate images for arbitrary descriptions from different viewpoints. It can blend concepts and demonstrates some visual reasoning abilities, such as solving Raven's Matrices (visual tests often used to measure human intelligence).
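The Transformer input described above, a caption followed by image tokens that are predicted one at a time, can be sketched as follows. The sizes are those reported in the original DALL·E paper: up to 256 caption tokens from a 16,384-entry vocabulary, and a 32×32 grid of 1,024 image tokens drawn from 8,192 codes produced by a discrete VAE. The padding id, the offset that places image ids after text ids, and the name `build_sequence` are illustrative assumptions, not the paper's implementation.

```python
# Sizes reported in the original DALL·E paper.
TEXT_LEN = 256       # maximum number of caption (BPE) tokens
TEXT_VOCAB = 16384   # caption vocabulary size
IMAGE_GRID = 32      # each image becomes a 32 x 32 grid of tokens
IMAGE_VOCAB = 8192   # number of discrete image codes

# Illustrative assumptions, not taken from the paper:
IMAGE_OFFSET = TEXT_VOCAB          # image ids placed after all text ids
PAD_ID = TEXT_VOCAB + IMAGE_VOCAB  # a padding id outside both ranges


def build_sequence(caption_tokens, image_tokens):
    """Return caption tokens (padded to TEXT_LEN) followed by offset image tokens."""
    if len(caption_tokens) > TEXT_LEN:
        raise ValueError("caption has more than TEXT_LEN tokens")
    if len(image_tokens) != IMAGE_GRID * IMAGE_GRID:
        raise ValueError("expected a full 32 x 32 grid of image tokens")
    if any(not 0 <= t < IMAGE_VOCAB for t in image_tokens):
        raise ValueError("image token out of range")
    text = list(caption_tokens) + [PAD_ID] * (TEXT_LEN - len(caption_tokens))
    image = [IMAGE_OFFSET + t for t in image_tokens]
    return text + image


caption = [17, 942, 3051]                             # hypothetical BPE ids
image_codes = [i % IMAGE_VOCAB for i in range(1024)]  # hypothetical dVAE codes
sequence = build_sequence(caption, image_codes)

# Next-token prediction: at position i the model sees inputs[0..i]
# and must predict targets[i], which is sequence[i + 1].
inputs, targets = sequence[:-1], sequence[1:]
print(len(sequence), len(inputs), len(targets))
```

Shifting the sequence by one position gives the next-token training targets, so every image token is predicted from the full caption plus all image tokens before it.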

DALL·E 2 adds image modification capabilities, including producing variations of existing images and editing them based on a prompt. It can inpaint (fill in missing or masked areas of an image) and outpaint (extend an image beyond its original borders) while keeping the new content consistent with the original image. Despite these capabilities, DALL·E 2 has limitations. It can struggle to distinguish prompts that differ mainly in word order, such as "a yellow book and a red vase" versus "a red book and a yellow vase"; it can produce incorrect images for prompts involving negation, numbers, or more than three objects; and it can exhibit biases inherited from its training data.
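The bookkeeping behind inpainting and outpainting can be sketched with a binary mask that marks which pixels the model should generate. This is a simplification: a real model generates the masked region conditioned on the surrounding pixels and the prompt, whereas here the "generated" image is a hypothetical stand-in and only the final compositing is shown. Treating outpainting as inpainting on an enlarged canvas is one common way to frame it, not necessarily OpenAI's exact procedure; `inpaint_composite` and `outpaint_canvas` are illustrative names.

```python
def inpaint_composite(original, generated, mask):
    """Keep original pixels where mask is 0; take generated pixels where mask is 1."""
    return [
        [g if m else o for o, g, m in zip(orow, grow, mrow)]
        for orow, grow, mrow in zip(original, generated, mask)
    ]


def outpaint_canvas(original, pad, fill=0):
    """Centre the original on a larger canvas and return the canvas together
    with a mask marking the new border region (1 = to be generated)."""
    h, w = len(original), len(original[0])
    height, width = h + 2 * pad, w + 2 * pad
    canvas = [[fill] * width for _ in range(height)]
    mask = [[1] * width for _ in range(height)]
    for r in range(h):
        for c in range(w):
            canvas[r + pad][c + pad] = original[r][c]
            mask[r + pad][c + pad] = 0
    return canvas, mask


original = [[5, 5], [5, 5]]
canvas, mask = outpaint_canvas(original, pad=1)
generated = [[9] * 4 for _ in range(4)]  # stand-in for the model's output
result = inpaint_composite(canvas, generated, mask)
for row in result:
    print(row)
```

The mask is what keeps the original content untouched: only the border (or, for inpainting, the erased area) is taken from the model's output.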

Concerns have been raised about the ethical implications of models like DALL·E 2. Algorithmic bias can be observed in the generated images, such as men being represented more often than women. OpenAI has taken steps to address bias and offensive content through prompt-based filtering and image analysis. There are also concerns that such models could be misused to create deepfakes and spread misinformation; OpenAI has tried to mitigate these risks by rejecting certain types of prompts and uploads. Separately, the popularity and accuracy of models like DALL·E 2 have raised concerns about technological unemployment for artists, photographers, and graphic designers.
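Prompt-based filtering, in its simplest form, rejects a request before any image is generated. The sketch below is a deliberately naive keyword filter; the blocklist and the function `is_prompt_allowed` are hypothetical and are not a description of OpenAI's actual moderation systems.

```python
import re

# Hypothetical blocklist, for illustration only.
BLOCKED_TERMS = {"gore", "massacre"}


def is_prompt_allowed(prompt, blocked=BLOCKED_TERMS):
    """Reject the prompt if any lowercase word in it appears in the blocklist."""
    words = set(re.findall(r"[a-z0-9']+", prompt.lower()))
    return words.isdisjoint(blocked)


print(is_prompt_allowed("a teddy bear riding a skateboard"))
print(is_prompt_allowed("A scene full of GORE"))
```

A filter like this is easy to evade with synonyms or misspellings, which is one reason production systems combine prompt checks with analysis of the images themselves.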